Learning a Deep Compact Image Representation for Visual Tracking (NIPS 2013)

Naiyan Wang and Dit-Yan Yeung.

Abstract

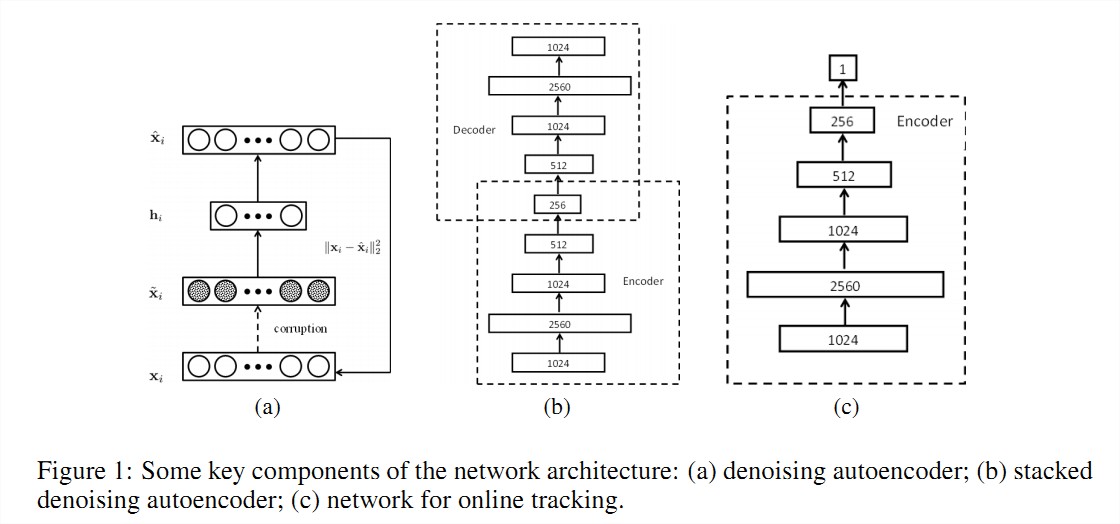

In this paper, we study the challenging problem of tracking the trajectory of a moving object in a video with possibly very complex background. In contrast to most existing trackers which only learn the appearance of the tracked object online, we take a different approach, inspired by recent advances in deep learning architectures, by putting more emphasis on the (unsupervised) feature learning problem. Specifically, by using auxiliary natural images, we train a stacked denoising autoencoder offline to learn generic image features that are more robust against variations. This is then followed by knowledge transfer from offline training to the online tracking process. Online tracking involves a classification neural network which is constructed from the encoder part of the trained autoencoder as a feature extractor and an additional classification layer. Both the feature extractor and the classifier can be further tuned to adapt to appearance changes of the moving object. Comparison with the state-of-the-art trackers on some challenging benchmark video sequences shows that our deep learning tracker is more accurate while maintaining low computational cost with real-time performance when our MATLAB implementation of the tracker is used with a modest graphics processing unit (GPU).
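The two stages described in the abstract can be illustrated with a minimal sketch. It is not the released MATLAB implementation: the layer sizes, the Gaussian noise level, the sigmoid activations, and every function name below are assumptions chosen for illustration, and random weights stand in for weights that offline training would produce. For brevity the loss is computed through the whole stack at once, whereas stacked autoencoders are commonly pretrained one layer at a time.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_layer(n_in, n_out, rng):
    """Small random weights; a stand-in for weights learned offline (assumption)."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def dense(x, layer):
    """One fully connected sigmoid layer; each weight row feeds one output unit."""
    weights, biases = layer
    return [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)]


def corrupt(x, noise_std, rng):
    """Add Gaussian noise to the input (the 'denoising' ingredient)."""
    return [xi + rng.gauss(0.0, noise_std) for xi in x]


def encode(x, encoder):
    """Run the input through the stacked encoder layers to get features."""
    for layer in encoder:
        x = dense(x, layer)
    return x


def reconstruction_loss(x, encoder, decoder, noise_std, rng):
    """Offline objective: reconstruct the clean input from a corrupted copy."""
    h = encode(corrupt(x, noise_std, rng), encoder)
    for layer in decoder:
        h = dense(h, layer)
    return sum((a - b) ** 2 for a, b in zip(h, x)) / len(x)


def confidence(x, encoder, classifier):
    """Online network: offline encoder as feature extractor plus one
    classification unit that scores how object-like the patch is."""
    return dense(encode(x, encoder), classifier)[0]


rng = random.Random(0)
sizes = [16, 32, 8]  # toy sizes, not the sizes used in the paper
pairs = list(zip(sizes, sizes[1:]))
encoder = [init_layer(a, b, rng) for a, b in pairs]
decoder = [init_layer(b, a, rng) for a, b in reversed(pairs)]  # mirror of the encoder
classifier = init_layer(sizes[-1], 1, rng)  # layer added for the online stage

patch = [rng.random() for _ in range(sizes[0])]  # flattened toy image patch
loss = reconstruction_loss(patch, encoder, decoder, 0.02, rng)
score = confidence(patch, encoder, classifier)
print("reconstruction loss:", loss)
print("classifier confidence:", score)
```

In this sketch the decoder is needed only for the offline objective. The online network reuses just the encoder, and both the encoder and the added classification layer would be adjusted further as the object's appearance changes.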

The code was updated on 19/03/2014 with better accuracy and faster speed. If you downloaded it before then, please download it again.

[pdf] [MATLAB code] [Sample Data]

[BibTex]

The code package includes the offline training results, so the download is large; please be patient :-)

More data can be found at: http://visual-tracking.net/

Related Project

Examples of learned filters in the first layer of the network. They all resemble Gabor-like edge detectors.
